To What Degree Can We Design Society?

Disentangling Collective Intelligence and Artificial Social Engineering

Note: this post was originally published on my Medium account.

Introduction: Governance and Intelligence

On a practical level, improving society consists largely of coming up with solutions to the innumerable problems that arise during its evolution. These object-level questions are difficult or impossible to predict in advance, however. On top of that, very few of them can be resolved to the satisfaction of all parties involved even in theory, let alone in practice. We are left with a meta-level question: what systems, if implemented, would improve society’s ability to solve problems when they arise? Since there is no objective “best answer” to any given social question, we are forced to think about which overall systems are likely to produce better answers.

This is a question of what might be called intelligence, broadly construed. How can we make our society more generally intelligent — that is, more able to resolve problems and produce good outcomes?

Implicitly, this is one of the questions that Plato was answering when he kicked off the Western political conversation. Roughly, the answer he offers in The Republic is that the wisest society is the one in which the wisest people — Plato’s “guardians” or “philosopher kings” — are in charge. This is an extremely intuitive answer to the question of social-level intelligence, to some degree motivating the meritocratic and autocratic ideals alike. Both ideologies, in their naïve extreme, assume that the individual is the only source of intelligence, and therefore that good governance is a matter of putting the smartest, wisest people in charge.

History tells us otherwise. In the twentieth century, democracy triumphed over autocracy. The popular narrative, in hindsight, is that the democratic system was more effective than, e.g., the fascist one: not because the democratic leaders were more intelligent than the fascist ones, but because the democratic system utilized the intelligence of the whole society more effectively. It’s difficult to say how true exactly this political narrative is — after all, the Axis could have won — but the economic story from the past few centuries is much more clear-cut. Open, market-capitalist systems dramatically outperformed economies which tried to implement top-down control and central planning.

One of the core assumptions of the democratic market-capitalist paradigm of the modern West is that large groups of people — whole societies — are capable of fumbling their way to good social-level outcomes, without any one intelligent entity in charge. And, as we saw in the 20th century, it’s just empirically true that this approach… well, it works pretty damn well. At least, as Churchill famously observed, relative to other approaches. The remarkable discovery of modernity has been that, like a perfectly synchronized flock of birds, the mass of humanity can generate adaptive bottom-up behavior without any genius architect behind the scenes.

The Field of Collective Intelligence

The past few decades have found a pretty good term to describe this phenomenon: collective intelligence, or, in the researcher’s parlance — more on that in a moment — CI. There are several popular definitions offered in the academic literature, but the one I like the best comes from Malone, Laubacher, and Dellarocas 2009: collective intelligence is “groups of individuals acting collectively in ways that seem intelligent.” Setting aside the slippery concept of intelligence, the simple and undeniable reality is that groups without top-down intelligent control often perform amazingly well.

One of the earliest general-audience expositions of this argument appears in James Surowiecki’s 2004 book The Wisdom of Crowds, which tours a wealth of examples and a substantial body of research with the thesis that “under the right circumstances, groups are remarkably intelligent, and are often smarter than the smartest people in them” (p. XIII). This book played a large role in framing the modern conversation around CI, but the seeds of the idea have been around for much longer. French cultural and media theorist Pierre Lévy was writing about collective intelligence a decade earlier in the 1994 book L’intelligence collective: Pour une anthropologie du cyberspace (Collective Intelligence: Mankind’s Emerging World in Cyberspace). The first known academic paper with the term “collective intelligence” in the title was Wechsler’s 1971 paper “Concept of collective intelligence” (Malone and Bernstein 2015, Sassi et al. 2022). H.G. Wells published a book called World Brain in 1936 and entomologist William Morton Wheeler coined the term “superorganism” in 1911. Condorcet’s Jury Theorem (first expressed in 1785) “puts the law of large numbers in the service of an epistemic argument for majority rule”; in other words, it gives “proper analytical shape” to the idea of collective intelligence (Landemore 2013, p. 54). Likewise, Adam Smith’s invisible hand can be viewed as a CI-aligned idea. Even Aristotle wrote in Politics that “The many, who are not as individuals excellent men, nevertheless can, when they have come together, be better than the few best people, not individually but collectively.”
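Condorcet’s result is easy to check numerically. Here is a minimal sketch, assuming the textbook version of the theorem: an odd number of voters, each independently correct with the same probability, deciding a yes/no question by simple majority. The function name and the example probabilities are mine, chosen purely for illustration.

```python
from math import comb


def majority_correct_probability(n_voters: int, p_correct: float) -> float:
    """Probability that a strict majority of independent voters is right.

    Assumes every voter is correct with the same probability p_correct
    and that votes are statistically independent (the textbook setup).
    """
    if n_voters < 1 or n_voters % 2 == 0:
        raise ValueError("use a positive, odd number of voters to avoid ties")
    votes_needed = n_voters // 2 + 1
    # Sum the binomial probabilities of every outcome with a correct majority.
    return sum(
        comb(n_voters, k) * p_correct**k * (1 - p_correct) ** (n_voters - k)
        for k in range(votes_needed, n_voters + 1)
    )


# Illustrative values only: voters slightly better, then slightly worse, than a coin flip.
for p in (0.55, 0.45):
    for n in (1, 11, 101):
        print(f"p={p}, n={n}: {majority_correct_probability(n, p):.3f}")
```

When each voter is right more often than not, the majority’s accuracy climbs toward certainty as the group grows. When each voter is right less often than not, the same arithmetic drives the majority toward being reliably wrong. The independence and equal-competence assumptions are doing a lot of work here, which is part of why the theorem gives the idea analytical shape rather than settling it.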

Over the past two decades, a field has coalesced around formalizing and explaining these observations. It is not united by any one disciplinary boundary, nor by a set of shared methods. Rather, CI is a “transdisciplinary” (Yang and Sandberg 2022) inquiry into the mechanisms which give rise to the phenomena we’ve discussed. What has it found?

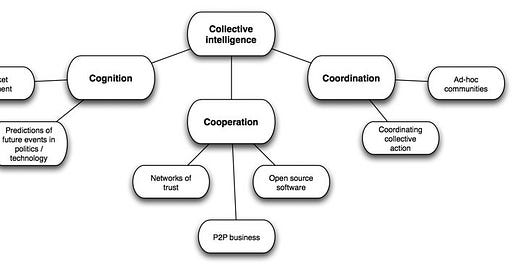

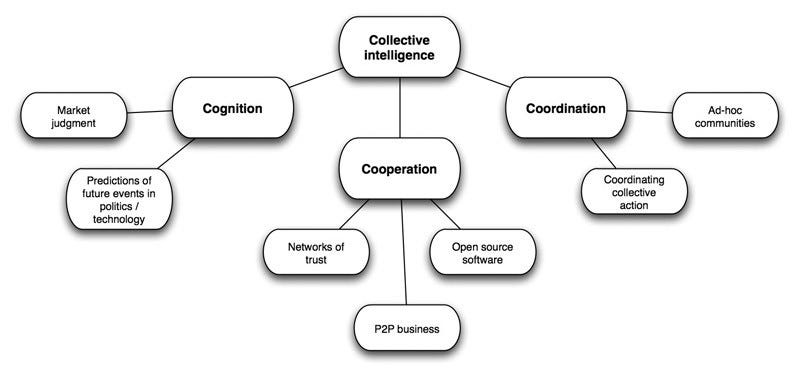

Overall, Vicky Chuqiao Yang, a CI researcher at MIT, says, “what we know about CI exists as bits of effects that are often not connected.” CI is far from a singular, well-organized theory. There is some popular vocabulary floating around, and various attempts at broad taxonomizing (see He et al. 2019 for a relatively recent example). However, the taxonomy originally introduced by Surowiecki 2004, which groups CI into categories of cognition, coordination, and cooperation, is still widely used today.

The stickiness of this scheme points more to the lack of a truly unifying framework in the field than to its own synthesizing power. As for those specific effects, here’s a small sample of the kind of results which show up in the CI literature:

Cognitive diversity seems to increase CI in groups (Surowiecki 2004, Page 2019, Aminpour et al. 2021).

There’s a statistical factor in small groups that predicts out-of-sample performance on a wide variety of tasks; that is, groups that perform well on one task are also likely to perform well on others. This is analogous to the “g-factor” in individual intelligence (the quantity which IQ tests measure), which correlates performance on many cognitive tasks. This result was first reported in Woolley et al. 2010 and was strengthened by Riedl et al. 2021. The latter paper also reports that the social perceptiveness of individual group members is very predictive of group CI, and as such a high proportion of women (who are generally more socially perceptive than men) is associated with a high CI (Yang and Sandberg 2022, Riedl et al. 2021). The general consensus seems to be that the “group collaboration process is more important in predicting CI than the skill of individual members” (Riedl et al. 2021).

We know that the extent to which individuals communicate and the topology of their social network affect CI, but it’s not exactly clear how. Surowiecki 2004 reports that CI is increased by “independence” among individuals, i.e. CI is higher among groups where individuals don’t communicate, or at least in which their decisions don’t depend on each other’s. This may be true in the narrow “estimation” contexts that Surowiecki was discussing (like guessing the weight of a cow or the number of jellybeans in a jar), but the picture has been complicated by later studies (e.g. Yang et al. 2021, Almaatouq et al. 2020).

That being said, it’s clear that CI can fail in the presence of “groupthink.” As Yang and Sandberg 2022 put it, “The bottom line is that most people blindly following others does not lead to good outcomes in most scenarios.”

We have some rules of thumb about how to increase CI in organizations (see the Nesta Centre for Collective Intelligence Design and their playbook, for instance), but they are very much just that: rules of thumb.

We do not know how to improve CI in society as a whole (nor how exactly to conceptualize such a thing, if it exists). As Yang says, “research does not yet offer recipes for constructing a good human collective” (Yang and Sandberg 2022). We do not know the upper bound of CI, that is, how much we could expect to gain, socially, in the optimum possible situation. We do not know exactly why CI arises, in full detail. Overall, we simply don’t have a framework that answers these questions; in fact, we don’t know if they’re answerable at all within our current paradigm.
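The independence point from the estimation setting is the one result in this list with a simple piece of arithmetic behind it. Here is a minimal sketch under a toy model of my own choosing (not one taken from any of the papers cited): every guess has the same error spread `sigma`, and any two guessers’ errors share a pairwise correlation `rho`, built from one shared error component plus one private component per person. All function and parameter names are hypothetical.

```python
import random
from statistics import fmean


def crowd_error_variance(n: int, sigma: float, rho: float) -> float:
    """Variance of the crowd's average error in the toy model.

    Each of the n individual errors has standard deviation sigma, and every
    pair of errors has correlation rho (an assumption of this sketch).
    """
    return sigma**2 * (1 + (n - 1) * rho) / n


def simulate_crowd_mse(n, sigma, rho, trials=2000, seed=0):
    """Monte Carlo estimate of the same quantity, as a sanity check."""
    rng = random.Random(seed)
    shared_scale = sigma * rho**0.5        # error component everyone shares
    own_scale = sigma * (1 - rho) ** 0.5   # error component private to each guesser
    squared_errors = []
    for _ in range(trials):
        shared = rng.gauss(0, 1) * shared_scale
        errors = [shared + rng.gauss(0, 1) * own_scale for _ in range(n)]
        squared_errors.append(fmean(errors) ** 2)
    return fmean(squared_errors)


# Illustrative numbers only: 50 guessers, individual error spread of 10 units.
for rho in (0.0, 0.3):
    print(rho, crowd_error_variance(50, 10, rho), simulate_crowd_mse(50, 10, rho))
```

With fully independent errors the averaged error shrinks in proportion to the size of the crowd. With any shared component it levels off at a floor set by that shared part, no matter how many people are added. That is all “independence” buys you in this toy setting, and it says nothing about tasks where communication lets people correct each other, which is where the later studies complicate the picture.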

In a way, CI is simply a useful term which references an undeniable observation: groups often exhibit a kind of intelligence which does not derive straightforwardly from the intelligence of their constituent members. Markets and democracies just are collectively intelligent, insofar as they achieve good outcomes without the direction of a single mind. We really do coordinate in fascinating and often highly effective ways which nobody fully understands, and no amount of central planning will be able to bring all human affairs under some individual’s control. Sometimes this observation even seems pedestrian: the human brain can be viewed as intelligence arising from the interactions of a collective of neurons, and in that sense is a CI itself (Malone and Bernstein 2015). Really, the CI concept, when extended beyond just human groups, can encompass all forms of intelligence. Rather than making it useless, this draws attention to the fact that there’s no reason the dynamics which give rise to the intelligence exhibited by individual humans can’t extend to the inter-individual level. Intelligence is a phenomenon which emerges from interaction, rather than being a basic property of the universe, and therefore it applies on the social level. That observation, on its own, is a remarkable and inspiring thing.

Artificial (Collective) Intelligence

But when organizational designers talk about collective intelligence, they’re not always just talking about the empirical nature of cognition. The Wisdom of Crowds is not only about “the world as it is,” but about “the world as it might be” (p. XIV). Likewise, CI is not only a useful descriptive term, but a vision: the launch point for an inspired and compelling narrative on what society is and how we might improve it. It’s an outgrowth of the democratic vision, one which doesn’t revere market capitalism as perfect or the US Constitution as an infallible document, and which takes a more nuanced view of human nature and human social interaction, but which still sees boundless promise in a broadly enfranchised and collaborative humanity as opposed to a strict hierarchy. As Divya Siddarth, founding member of the Collective Intelligence Project, puts it in a recent Wired article: “Adopting a frame of collective intelligence lets us see existing democracy as a starting point, rather than as a finished project.”

This vision has been present in the discussion of CI from the start, and is often not clearly distinguished from the empirical reality. For example, the mission of the MIT Center for Collective Intelligence, which was founded in 2006 and has been one of the nuclei of the field since, is to explore “how people and computers can be connected so that — collectively — they act more intelligently than any person, group, or computer has ever done before.”

So when people talk about collective intelligence, they’re often really talking about the prospect of ultimately engineering a kind of artificial collective intelligence. The premise is that — enabled by computing and communication technology and guided by social science — we can paradigmatically improve the way society processes information. This idea has always been an inspiring part of the CI movement, whether or not its advocates would use the terminology I’ve chosen. Indeed, versions of it have been present since the early days of the internet.

Is it Possible to Artificially Upgrade Collective Intelligence?

To believe in the plausibility of artificially engineering collective intelligence, you need to take things a step further than CI, the empirical observation, will get you. You need to believe not only that CI exists, but that we know roughly how to improve it, or at least that major improvement is possible. I think that people sometimes implicitly bundle these two propositions, taking the science which shows that CI exists and using it to argue for a specific kind of social intervention. In part, what I’m trying to do in this article is disentangle that rhetoric: just because collective intelligence exists as a phenomenon does not mean that a less hierarchical system will inevitably be more collectively intelligent. The exciting consequence of the CI phenomenon is that group intelligence is not necessarily bounded by individual intelligence. But that doesn’t mean that whatever new system you come up with (be it the internet, an organization without traditional corporate hierarchy, or a social movement) will happen to have more collective intelligence than a traditional organization. This might be obvious to some, but for me, at least, it took a while to really verbalize. I think I deserve some sympathy, though: it’s not as though all of the writing on this subject makes this distinction clear. For example, in The Wisdom of Crowds, Surowiecki says: “if you put together a big enough and diverse enough group of people and ask them to ‘make decisions affecting matters of general interest,’ that group’s decisions will, over time, be ‘intellectually [superior] to the isolated individual,’ no matter how smart or well-informed he is” (p. XVII). But I just don’t think that the available science convincingly demonstrates such a general statement.

In fact, in the general case, it’s not clear to me that saying one social arrangement is “more” collectively intelligent than another is even coherent. As mentioned above, there appears to be a statistical factor which predicts some kind of small-group CI (Riedl et al. 2021), but there’s no well-defined analogue for whole societies.

It’s a bit like the early history of AI. At a workshop held at Dartmouth in the summer of 1956, widely considered to mark the foundation of AI as a field, researchers recognized that there’s no reason, in principle, that manmade systems shouldn’t be able to exhibit intelligence. This realization led those early researchers to extreme optimism about the near-term promise of AI; in 1965, Herbert Simon (one of the 20 or so participants in the original Dartmouth workshop) infamously said “machines will be capable, within twenty years, of doing any work a man can do” (Crevier 1993). But the practitioners of what’s nowadays called Good Old-Fashioned AI didn’t actually possess as complete a model of how human intelligence arises as they thought, and it took decades of experimentation to produce genuinely powerful AI.

There are increasing numbers of people who, like those early AI researchers, believe that humans will soon understand the mechanisms which give rise to CI to the point where we can actively amplify them in our own society. And, as in the case of AI, there doesn’t seem to be any reason why artificial collective intelligence engineering shouldn’t be possible in principle. It’d be awfully strange if a paradigm largely invented by a bunch of statesmen hundreds of years ago happened to be anywhere close to optimal. That’s an oversimplification, but the basic point makes sense. Just looking around at the sociotechnical information processing landscape (academia, traditional news media, existing social media, and so on), it’s hard to believe there’s not a much better way of doing things.

So insofar as society-level intelligence is a specific thing, a society possessing more of it ought to be possible. But how much better things could get is an open question, constrained in part by our lack of understanding of what CI, at the societal level, really ought to mean. What’s the upper bound of CI in human society? And how do we get there?

Society is Already Engineered

When I introduced the idea of artificially upgrading collective intelligence, I said that the premise is to “paradigmatically improve the way society processes information.” “Collective intelligence” may or may not be the right frame for looking at all this, but one thing is clear: we already have fundamentally changed the way society processes information. Several times, in fact.

Writing, printing, markets, and democracy may all be seen as technologies for (artificially) improving society-level collective intelligence. The reason the internet has always been a big deal is that it represents exactly the kind of paradigm-shifting social technology we’re talking about, although it’s not always clear that it’s straightforwardly improving collective intelligence.

These are not novel observations. Rather, the useful statement is that to some degree our society’s sensemaking apparatus is already properly being engineered. In the policymaking and macroeconomic senses, yes, but also in the straight-up hardware, software, people-doing-it-are-called-engineers sense. I’m talking about social media algorithms, of course, which govern the distribution of a massive amount of information every day. As a 2021 paper which argues that the study of collective behavior should emerge as a crisis discipline, alongside things like medicine and climate science, puts it, “There is no viable hands-off approach. Inaction on the part of scientists and regulators will hand the reins of our collective behavior over to a small number of individuals at for-profit companies” (Bak-Coleman et al. 2021).

While we may not yet have a complete science of collective intelligence, some kind of very explicit engineering on the “world brain” is already taking place. So the question we need to ask ourselves is not whether we should profoundly change the way humanity thinks, but in what way?

References

Almaatouq, A., Noriega-Campero, A., Alotaibi, A., Krafft, P. M., Moussaid, M., & Pentland, A. (2020). Adaptive social networks promote the wisdom of crowds. Proceedings of the National Academy of Sciences, 117(21), 11379–11386. https://doi.org/10.1073/pnas.1917687117

Aminpour, P., Gray, S. A., Singer, A., Scyphers, S. B., Jetter, A. J., Jordan, R., Murphy, R., & Grabowski, J. H. (2021). The diversity bonus in pooling local knowledge about complex problems. Proceedings of the National Academy of Sciences, 118(5), e2016887118. https://doi.org/10.1073/pnas.2016887118

Aristotle. (2013). Aristotle’s “Politics.” University of Chicago Press.

Bak-Coleman, J. B., Alfano, M., Barfuss, W., Bergstrom, C. T., Centeno, M. A., Couzin, I. D., Donges, J. F., Galesic, M., Gersick, A. S., Jacquet, J., Kao, A. B., Moran, R. E., Romanczuk, P., Rubenstein, D. I., Tombak, K. J., Van Bavel, J. J., & Weber, E. U. (2021). Stewardship of global collective behavior. Proceedings of the National Academy of Sciences, 118(27), e2025764118. https://doi.org/10.1073/pnas.2025764118

Collective Intelligence Design Playbook (beta). (n.d.). Nesta. Retrieved October 1, 2022, from https://www.nesta.org.uk/toolkit/collective-intelligence-design-playbook/

Crevier, D. (1993). AI: The Tumultuous History of the Search for Artificial Intelligence. BasicBooks.

He, F., Pan, Y., Lin, Q., Miao, X., & Chen, Z. (2019). Collective Intelligence: A Taxonomy and Survey. IEEE Access, 7, 170213–170225. https://doi.org/10.1109/ACCESS.2019.2955677

Landemore, H. (2013). Democratic Reason: Politics, Collective Intelligence, and the Rule of the Many. Princeton University Press.

Lévy, P. (1997). L’intelligence collective: Pour une anthropologie du cyberspace. La Découverte.

Malone, T. W., & Bernstein, M. (2015). MIT Handbook of Collective Intelligence.

Malone, T. W., Laubacher, R., & Dellarocas, C. (2009). Harnessing Crowds: Mapping the Genome of Collective Intelligence (SSRN Scholarly Paper №1381502). https://doi.org/10.2139/ssrn.1381502

MIT Center for Collective Intelligence. (n.d.). Retrieved October 4, 2022, from https://cci.mit.edu/

Page, S. E. (2019). The diversity bonus: How great teams pay off in the knowledge economy. Princeton University Press.

Plato. (2012). The Republic: The Influential Classic. John Wiley & Sons.

Riedl, C., Kim, Y. J., Gupta, P., Malone, T. W., & Woolley, A. W. (2021). Quantifying collective intelligence in human groups. Proceedings of the National Academy of Sciences, 118(21), e2005737118. https://doi.org/10.1073/pnas.2005737118

Sassi, S., Ivanovic, M., Chbeir, R., Prasath, R., & Manolopoulos, Y. (2022). Collective intelligence and knowledge exploration: An introduction. International Journal of Data Science and Analytics, 14(2), 99–111. https://doi.org/10.1007/s41060-022-00338-9

Siddarth, D. (n.d.). To Fix Tech, Democracy Needs to Grow Up. Wired. Retrieved August 16, 2022, from https://www.wired.com/story/collective-intelligence-democracy/

Social Media and News Fact Sheet (Pew Research Center’s Journalism Project). (n.d.). Pew Research Center. Retrieved October 5, 2022, from https://www.pewresearch.org/journalism/fact-sheet/social-media-and-news-fact-sheet/

Surowiecki, J. (2004). The wisdom of crowds: Why the many are smarter than the few and how collective wisdom shapes business, economies, societies, and nations (pp. xxi, 296). Doubleday & Co.

Wells, H. G. (2016). World Brain. Read Books Ltd.

Wechsler, D. (1971). Concept of collective intelligence. American Psychologist, 26, 904–907. https://doi.org/10.1037/h0032223

Wheeler, W. M. (1911). The ant-colony as an organism. Journal of Morphology, 22, 307–326. https://doi.org/10.1002/jmor.1050220206

Woolley, A. W., Chabris, C. F., Pentland, A., Hashmi, N., & Malone, T. W. (2010). Evidence for a Collective Intelligence Factor in the Performance of Human Groups. Science, 330(6004), 686–688. https://doi.org/10.1126/science.1193147

Yang, V. C., Galesic, M., McGuinness, H., & Harutyunyan, A. (2021). Dynamical-System Model Predicts When Social Learners Impair Collective Performance (arXiv:2104.00770). arXiv. https://doi.org/10.48550/arXiv.2104.00770

Yang, V. C., & Sandberg, A. (2022). Collective Intelligence as Infrastructure for Reducing Broad Global Catastrophic Risks (arXiv:2205.03300). arXiv. http://arxiv.org/abs/2205.03300